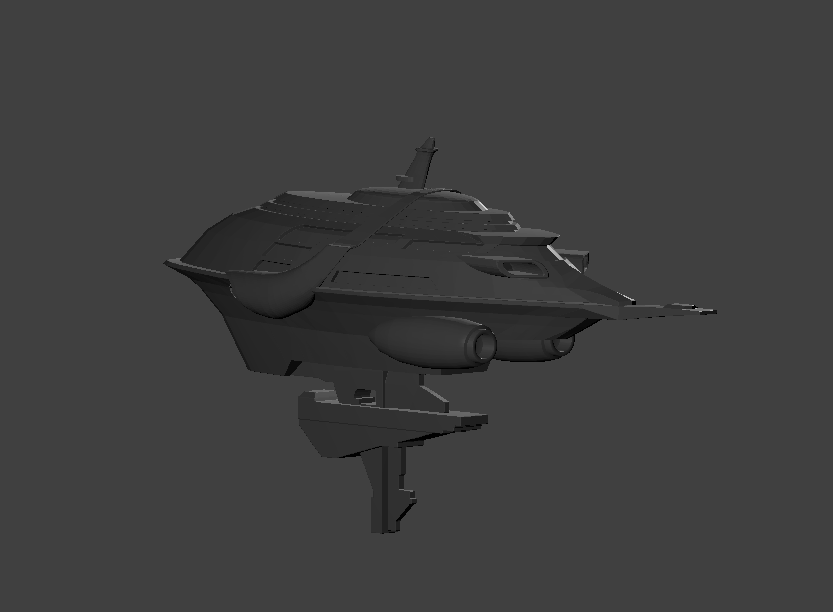

Mech Combat Mini

Download Alpha 1.5:

Download, leave feedback in the comments below, and come back to try out the next version.

Multiple weapon types

Version Alpha 1.4 added damage indicators and grenades.

Maps will be generated from real-world map data.

Q: What is the purpose?

A: To make a small mech action game and have a playable version finished in one month.

Q: How is it made?

A: Using the Unity game engine and the kindness of like-minded people.

Q: Can I help?

A: Absolutely! Simply sign up for the newsletter below, leave a comment with suggestions, and tell your friends about this. I'll be updating as frequently as I can.

Design Goals (WIP)

- Engaging and satisfying mech combat

- Generate maps from real-world map data

- Multiple weapon types

- Local multiplayer (stretch goal: asymmetric VR gameplay support)

Q: Who is working on this?

A: Me, you, and our friends

Q: When is it done?

A: I've set a one-month deadline to make the best game I can. The delivery date is April 7th, 2017.

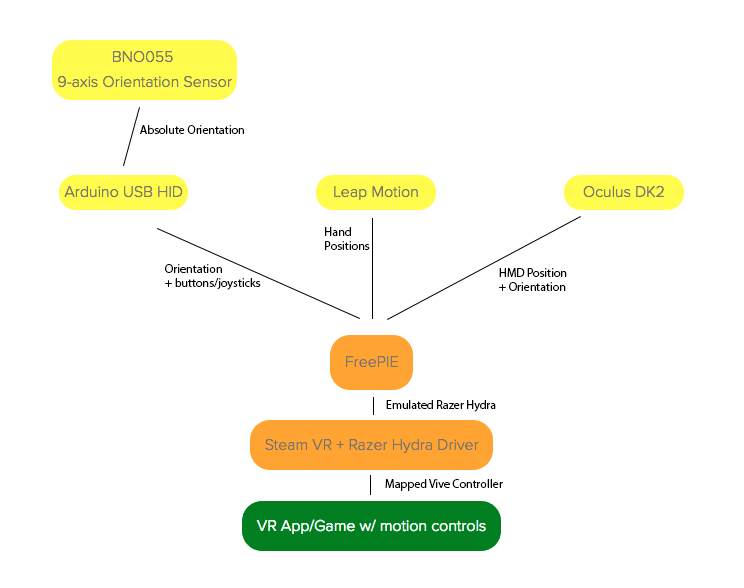

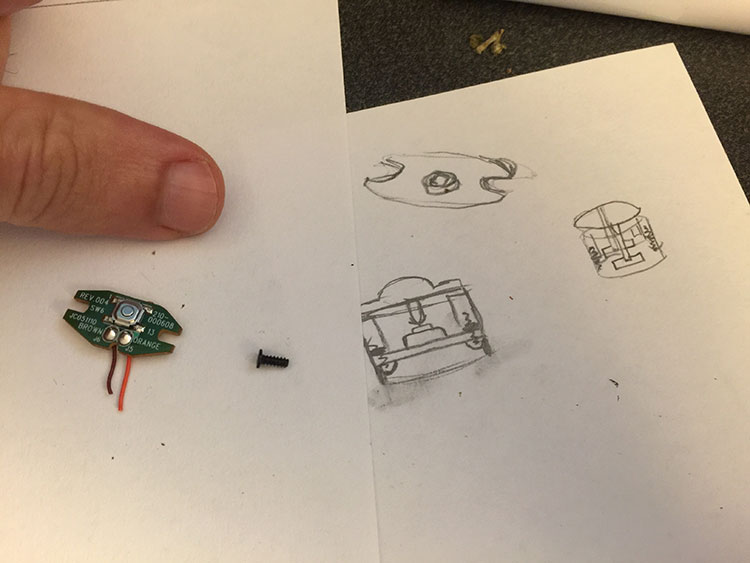

VR mech test using a smartphone to reduce motion sickness.

Tests

Version History

- v0.1.5 - Camera follows Player 1 (Player 2 is still controllable with an Xbox controller on the Windows build)

- v0.1.4 - Damage indicators, grenades

- v0.1.3 - Moving AI enemies

- v0.1.2 - AI enemies

- v0.1.1 - Added an FPS counter, 'm' key to mute music, and a check for negative health

- v0.1.0 - Initial release