Arduino Vive Controller Emulation

The goal: DIY VR hand controls that emulate the HTC Vive controllers. It's a continuation of the experiments done here.

The delay in the video is not present in the VR headset.

By fusing the Leap Motion's hand position with orientation data from an Arduino-connected sensor, a high-accuracy VR manipulation device can be assembled. Previous experiments revealed that object manipulation with the Leap Motion could be improved by fusing the data from additional sensors. A BNO055 9-axis orientation sensor fills the gaps where the hands are obscured. The result prevents jarring movements that break VR immersion and create strange conditions for physics engines. Moreover, the controller doesn't need to be gripped since its U-shaped design clasps the hand. This leaves the user's hands free to type or gesture. Finally, tactile switches and joysticks provide consistent behavior in precision or reflex-based tasks, such as FPS games. Current-gen VR controllers such as the HTC Vive, Oculus Touch, and Sony Move controllers all incorporate various buttons, triggers, and touch-pads to fill this role, since gesture-only input methods lack consistency. This experiment is somewhere in the middle.

Right Hand

The right hand prototype came first. As minimal as possible and built fast to start testing as soon as possible. Naturally, a lot of hot glue. Three repurposed arcade buttons, a tiny PSP style joystick, and two SMD micro switches for the Menu and System buttons. 28AWG wire connects everything to an Arduino Pro Micro (sourced on eBay).

Left Hand: The Second Attempt

One of the pieces of feedback was to use the rotation type joystick so I used a replacement Gamecube joystick from a previous project to try it out. Great feedback!

While changing the joystick, I also adjusted some dimensions by extending the joystick forward and trying a slightly different button configuration. There are still a lot of improvements to be made here. Check out the GitHub page for the 3D printer STL files. I'm waiting on a large lot of SMD micro switches to arrive so I can add the Menu and System buttons. The smaller buttons will also let me shrink the design even further.

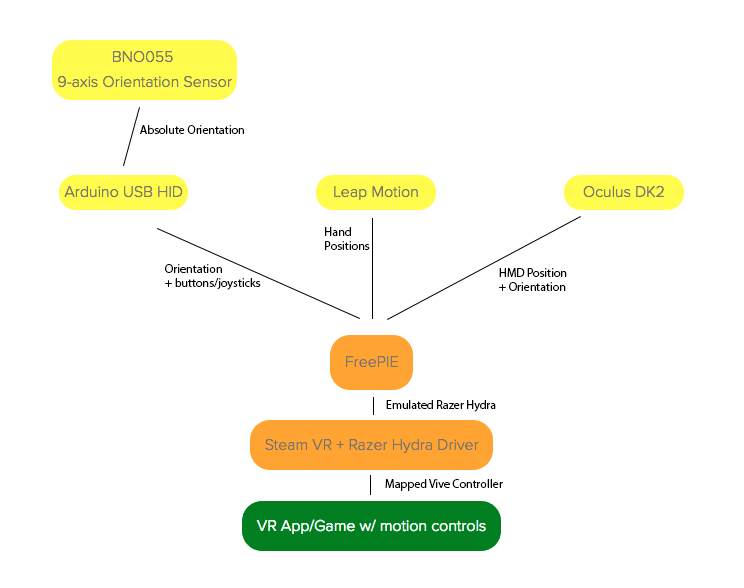

System Architecture

- Orientation Sensor - BNO055 from Adafruit

- Arduino - Pro Micro flashed as USB gamepad using NicoHood Arduino HID Project

- Leap Motion - nonverbal FreePIE plugin forum post using Orion SDK

- Oculus DK2 - (native FreePIE support)

- FreePIE software - programmable input emulator

- Driver for Steam VR - Razer Hydra

The Math

The problem we're trying to solve is split up into two goals:

- Position (x,y,z) controller using the Leap Motion's hand position data - a function of the headset position and orientation because the Leap Motion sensor is mounted to the headset

- Orient (yaw, pitch, roll) controller using Arduino/orientation sensor - using absolute orientation from the 9-axis BNO055 sensor

Linear algebra, more specifically the 4x4 matrix 3D transformations often used in computer graphics, is practically magic. The script takes the input from the Leap sensor SDK and converts it to values the Razer Hydra driver understands, while preserving translations, rotations, and offsets along the way.

Most of what's happening is summed up by the steps below, and the math source image on the right. I couldn't find a way to import NumPy into FreePIE, so all the matrix math was rewritten in pure Python. Here's what the FreePIE script does:

- Wait for 'recenter' event, then store current headset position and orientation

- Continuously calculate how much the headset has translated and rotated since 'recenter' event

- Transform Leap hand data into the headset coordinate space

- Continue transform of Leap hands into calibrated (i.e. 'recenter' event) space

- Continue transform of Leap hand positions into Hydra space (compensating for offsets from calibration step throughout the steps above because of driver behavior*)

- Set Hydra position values from transformed Leap data

*The Hydra controllers seem to offset their calibration state of 0,0,0, so I had to guess the y and z values. Not understanding where the magic number was coming from fooled me for a long time. Moreover, if the controllers aren't facing the base station on the recenter event, you could end up with rotation behavior mirrored from what you expect.
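The transform chain in the steps above can be sketched in pure Python without NumPy. This is a minimal illustration, not the actual FreePIE script: the function names, the row-major nested-list layout, and the use of a single y-axis rotation for the headset are all assumptions made for the example. The final Hydra offset step would be one more translation with the guessed y and z values, which are deliberately left out here.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as row-major nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def translation(x, y, z):
    """4x4 matrix that translates by (x, y, z)."""
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]

def rotation_y(angle):
    """4x4 rotation about the y axis; angle in radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0, s, 0], [0, 1, 0, 0], [-s, 0, c, 0], [0, 0, 0, 1]]

def rigid_inverse(m):
    """Invert a rotation+translation matrix: R' = R^T, t' = -R^T t."""
    r_t = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [m[i][3] for i in range(3)]
    inv_t = [-sum(r_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [r_t[0] + [inv_t[0]],
            r_t[1] + [inv_t[1]],
            r_t[2] + [inv_t[2]],
            [0, 0, 0, 1]]

def transform_point(m, p):
    """Apply a 4x4 transform to a 3D point (homogeneous w = 1)."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))

def hand_in_calibrated_space(head_now, head_at_recenter, hand_in_head):
    """Headset-local hand position -> space anchored at the recenter pose."""
    to_calibrated = mat_mul(rigid_inverse(head_at_recenter), head_now)
    return transform_point(to_calibrated, hand_in_head)

# Example: headset moved 0.1 to the side and turned 90 degrees since recenter.
recenter_pose = translation(0.0, 1.7, 0.0)
current_pose = mat_mul(translation(0.1, 1.7, 0.0), rotation_y(math.pi / 2))
hand = hand_in_calibrated_space(current_pose, recenter_pose, (0.0, 0.0, -0.4))
```

Keeping each step as its own matrix makes it easy to print intermediate results, which is the kind of simple check that helps expose an unexpected offset.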

Optimizations in the code were left for another day. I tried to be as explicit as possible since I wasn't familiar with the subject matter. This ended up being a good idea because I'm not sure how else I could have discovered the undocumented calibration behavior of the Steam VR Hydra driver.

The takeaway: find the simplest test you can verify your assumptions with. Even if it's just a thought experiment, 90% of the time a problem is an incorrect assumption that can be revealed by a simple question.

As an added complication, until a new Leap driver is written, FreePIE only has access to the restricted values of the normalized interaction box volume rather than the full field of view. If you look closely at the video you can see the controller 'confined' to an invisible box. This isn't latency, it's the values clipping.
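To illustrate the clipping, here is a small sketch of how a normalized, clamped coordinate behaves. The function name, the box center, and the box size are hypothetical values chosen for the example, not numbers taken from the Leap SDK.

```python
def normalize_to_box(value, center, size, clamp=True):
    """Map a coordinate to [0, 1] across a box; optionally clamp values outside it."""
    n = (value - center) / float(size) + 0.5
    if clamp:
        n = max(0.0, min(1.0, n))
    return n

# Hypothetical box: 200 units wide, centered on 0.
inside = normalize_to_box(0.0, 0.0, 200.0)            # middle of the box
clipped = normalize_to_box(150.0, 0.0, 200.0)         # hand is outside the box
unclipped = normalize_to_box(150.0, 0.0, 200.0, clamp=False)
```

Once the hand leaves the box, the clamped value stops changing, so the virtual controller appears pinned to an invisible wall even though the hand is still being tracked.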

To simplify, the Arduino sends the orientation sensor data by assigning the four quaternion components to four 16-bit axes: x, y, rx, ry. The thumbstick uses the 8-bit z and rz axes.
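The packing idea can be sketched as follows. It is written in Python for consistency with the FreePIE side, although the encoding half would live in the Arduino firmware. The scale factor, the rounding, and the order in which components map to axes are assumptions for illustration.

```python
import math

AXIS_MAX = 32767  # largest value of a signed 16-bit axis (assumed range)

def quat_to_axes(w, x, y, z):
    """Scale unit-quaternion components (each in [-1, 1]) to signed 16-bit ints."""
    return tuple(int(round(max(-1.0, min(1.0, c)) * AXIS_MAX))
                 for c in (w, x, y, z))

def axes_to_quat(a, b, c, d):
    """Recover the quaternion and renormalize to absorb rounding error."""
    q = [v / float(AXIS_MAX) for v in (a, b, c, d)]
    n = math.sqrt(sum(v * v for v in q))
    if n == 0.0:
        return (1.0, 0.0, 0.0, 0.0)  # no data yet: fall back to identity
    return tuple(v / n for v in q)

axes = quat_to_axes(0.5, 0.5, 0.5, 0.5)
restored = axes_to_quat(*axes)
```

A unit quaternion suits this transport well because every component already lies in [-1, 1], so each one fits a signed axis without extra range negotiation.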

Orienting the hands

First test calibrating, positioning, and orienting controller

Orienting the hands is done by a 9-axis BNO055 sensor, which returns absolute orientation relative to Earth's magnetic field. Instead of a serial connection to the computer, the orientation data is passed along as generic USB HID joystick values by the Arduino. In this way, there are no drivers to install and it's standards compliant on everything with a USB port (e.g. consoles). On the computer, the FreePIE script does the following:

- Read 16-bit joystick values (x, y, rx, ry)

- Convert them back into orientation data (Quaternion)

- Convert to Euler angles (pitch, yaw, roll)

- Apply orientation relative to 'recenter' event from calibration
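The last three steps can be sketched like this, assuming the quaternion has already been rebuilt from the joystick values as (w, x, y, z). The multiplication order for the recenter correction and the choice of z as the vertical (yaw) axis are assumptions. Both depend on how the sensor is mounted, so treat this as a starting point rather than the script's exact math.

```python
import math

def quat_conjugate(q):
    """Conjugate of a quaternion; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def quat_multiply(a, b):
    """Hamilton product a * b for quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def relative_to_recenter(q_now, q_recenter):
    """Rotation accumulated since recenter: inverse(recenter) * now."""
    return quat_multiply(quat_conjugate(q_recenter), q_now)

def quat_to_euler(q):
    """Return (pitch, yaw, roll) in radians, Z-Y-X convention, z assumed vertical."""
    w, x, y, z = q
    roll = math.atan2(2 * (w * x + y * z), 1 - 2 * (x * x + y * y))
    s = max(-1.0, min(1.0, 2 * (w * y - z * x)))  # clamp against rounding error
    pitch = math.asin(s)
    yaw = math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))
    return pitch, yaw, roll

# Example: sensor read 30 degrees of yaw at recenter and 75 degrees now.
h0, h1 = math.radians(30) / 2, math.radians(75) / 2
q_recenter = (math.cos(h0), 0.0, 0.0, math.sin(h0))
q_now = (math.cos(h1), 0.0, 0.0, math.sin(h1))
pitch, yaw, roll = quat_to_euler(relative_to_recenter(q_now, q_recenter))
```

Applying the recenter correction while still in quaternion form, and converting to Euler angles only at the end, avoids subtracting angles that wrap around.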

Joystick and Buttons

Mapping the joystick and buttons using USB HID library by NicoHood was straightforward. As a minor side-effect: the 16-bit axis registers are taken by the orientation data, which leaves only two 8-bit registers for the joystick x-y ... I'm not too concerned (right now).
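To make the resolution trade-off concrete, here is a sketch of squeezing a 10-bit analog reading (the ADC resolution on the Pro Micro's ATmega32U4) into a signed 8-bit axis. It is written in Python for illustration only. The real conversion would happen in the Arduino firmware, and the function name and exact mapping are assumptions.

```python
def adc_to_axis8(raw):
    """Map a 10-bit ADC reading (0..1023) to a signed 8-bit axis (-128..127)."""
    raw = max(0, min(1023, raw))
    return (raw >> 2) - 128  # drop the two lowest bits, then center on zero

low, mid, high = adc_to_axis8(0), adc_to_axis8(512), adc_to_axis8(1023)
```

Dropping two bits means four neighboring ADC readings share one axis value, which is rarely noticeable on a thumbstick.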

Next steps

I tried a few free Steam VR games/demos. There were a few glitches very specific to developer implementation, but overall very satisfying to finally have access to all the Steam VR stuff. The Steam VR tutorial worked flawlessly. Additionally, the Steam VR UI and controlling the desktop PC while in VR is very satisfying.

However, passing the data over the joystick axes caused the UI to sometimes try to go left-right, and the trigger button didn't work in Rec Room. There was a Rec Room bug fix for the Razer Hydra's trigger button, which I suspect is the culprit that ignores FreePIE's emulation of the Hydra's trigger. This resource was also useful in setting expectations of the system: http://talesfromtherift.com/play-vive-vr-room-scale-games-with-the-oculus-rift-razer-hydra-motion-controllers/

Long story short, it's best to create a custom experience in Unity directly for the controller right now, while open standards are still being worked out. Each app has quirks for each motion controller to make it compatible with their specific code. A custom experience would best leverage both worlds: finger tracking + precise interaction.

"While the fuzzy notion of a cyberspace of data visualized in 3D is unlikely in a general sense, specialized visualization of data sets that you can 'hold in your hand' and poke at with your other hand while moving your head around should be a really strong improvement."

Having access to some of the VR experiences that require motion controllers is spectacular. I hope these source files help some of you progress towards an open VR landscape, where everyone is on an equal playing field. While waiting for these standards, I'm going to keep working on the ergonomics and controls while conceiving of data sets in VR to visualize. Share your ideas in the comments below.